Monthly ML: Data Science & Machine Learning Wrap-Up — March 2020

Thanks for checking out my Data Science and Machine Learning blog series. Every month, I’ll be highlighting new topics that I’ve explored, projects that I’ve completed and helpful resources that I’ve used along the way. I’ll also be talking about pitfalls, including diversions, wrong turns and struggles. By sharing my experiences, I hope to inspire and help you on your path to become a better data scientist.

Greetings! Another month, done. As the world dances with chaos, I hope that, rather than dwelling on the negative, you are finding the motivation to improve yourself. The internet is a tremendous resource, if only we resist the addictive, empty content designed to steal our attention.

Before I get into what I’ve been up to, I wanted to invite you to follow my #100DaysOfCode journey on Twitter. Yesterday was the 45th day — already! I look forward to continuing to connect with other learners, professors, engineers and data scientists. Send me a message if you’re doing the challenge and I will happily follow you too.

With that, let’s recap the highlights of March.

Inspired by current events, I spent the first few days of the month collating my own COVID-19 dataset. This was an interesting challenge due to the data being contained within WHO PDF bulletins, rather than simple HTML. As usual, if there’s a will, there’s a Python library that exists to achieve it — in this case, TabulaPy.

At this point, I had an urge to play with cellular automata, a topic that I had studied during a class called Artificial Life, Culture & Evolution while at Duke University. In revisiting Conway’s Game of Life, my goal was to create a command-line interface that enables users to generate universes to their specifications. In particular, I wanted the flexibility to customize the size, length and frame rate of the animation as well as the content itself, namely the starting seed and the color scheme. Of course, I couldn’t help but add some “mayhem”, enabling users to seed a little bit of chaos with the optional command-line argument --mayhem True.

Happy with my first CLI application, I also learned what makes a good Github README and the proper way to document code for reusability.

The next project I tackled was something I’ve been wanting to do for a few months: re-design my custom portfolio website. I had a good skeleton already in place, but it was out-of-date, reflecting an old me with different priorities. The new and improved site, located at amypeniston.com, showcases my data science projects, Kaggle contributions and Github portfolio at the forefront. I also set up a WordPress blog to house project-related narratives. Very pleased with the final product, plus I got to practice the old web dev skills.

At this point, I went all in on neural networks, fueled by the first 10 chapters of Deep Learning Illustrated. I must say, I’ve experimented and enjoyed many different topics within machine learning so far, but neural networks excite me the most.

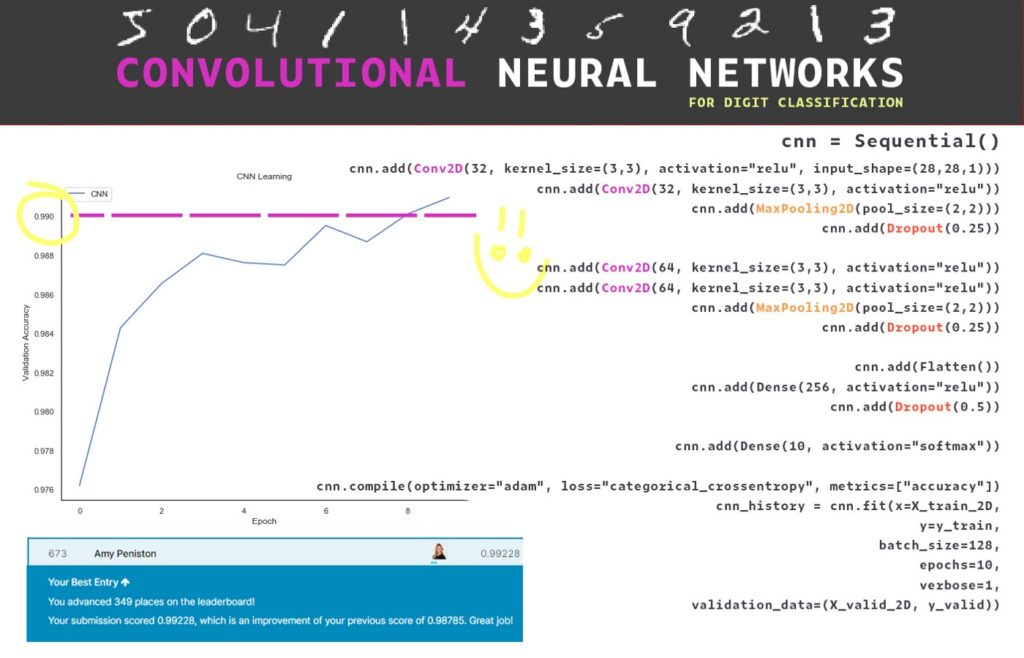

Anyway, I started building models to classify handwritten MNIST digits — the Fizz Buzz of deep learning. I established a baseline score using Logistic Regression and then proceeded to blow that score away using a series of increasingly appropriate neural networks. Terminology like dropout, activation functions and convolutional layers jumped off the page and into reality. Eventually, I implemented a LeNet-5-esque model and published my experimentation to Kaggle.

Midway through March, I started a new course to learn more about the Hadoop ecosystem. Big data buzzwords abound at the moment and I’m working to familiarize myself with the whole stack: HDFS (Hortonworks), Hive, Pig, Spark etc. etc. It’s also exposing me to the concept of interacting with virtual machines.

Towards the end of the month, I started my favorite project so far: Squirrel GAN. The goal was to generate human-like drawings using Google’s Quick Draw! data. To do this, I built and experimented with a generative adversarial network, culminating in some pretty convincing squirrels!

Other interesting projects/notes from this month:

- Compiled a reusable set of common NLP preprocessing techniques including code to visualize word embeddings (SO COOL)

- Used an LSTM network to classify IMDB movie review sentiment

- Derived various machine learning processes, including backpropagation in neural networks and stochastic gradient descent

- Learned about Heroku deployment and Agile story points during a few pair-programming sessions

- Finished Deep Learning Illustrated — highly, highly recommend this book

Looking forward to next month: unfortunately, all of my plans to attend Google Cloud Next, Kaggle Days and Open Data Science Conference have been canceled. I am very disappointed but it shan’t stop me from learning.

In April, I will be participating in LeetCode’s 30-day challenge, which will be great practice for solving algorithmic problems, while being cognizant of time and space complexity.

I do want to jump back into Kaggle competitions, but haven’t decided which one yet. It will likely involve — get ready — neural networks!!

Finally, I plan to to complete the big data course that I’m working on and build a functioning pipeline that crystallizes my understanding. I have an idea that will include real-time data streaming, but I’m still hashing out the details.

With that, March is a wrap! Thanks again for reading and, until next time, happy coding.